Let’s Fly Inside #5: An Interview With the Leading AI & ML Specialist at Fively

Discover everything about machine learning and artificial intelligence development services at Fively in a fresh interview with our top AI and ML engineering specialist.

Artificial Intelligence has now become one of Fively’s main specializations: we develop robust virtual assistants, highly customized chatbots, sales process automation tools based on data science algorithms, ML-enabled computer vision tools, and even complex AI-based predictive models that improve decision-making across businesses.

But all these high-tech AI solutions seem so complex. How did we get here so fast? And what objectives have we set for the next year in this direction? Today we’re lucky to have a sincere talk about all these topics with our leading AI & ML specialist, Andrew Oreshko. Take a short break, make yourself a cup of tea or coffee, and let’s start our journey into the AI world!

Hi, Andrew! Thank you for finding the time for this interview, as AI is a hot topic right now! Can you first tell us a bit about your background and what led you to AI and machine learning?

Hey, Alesya! Sure! First, as for my fundamental background, I originally started as a frontend AngularJS engineer. A couple of years later, I moved to backend development with Node.js and Python (mostly Python), and from then on I was mainly involved in various Python-based projects, where I developed APIs and API integrations, optimized system and database load, and handled a number of other complex tasks.

Second, as for my ML path and experience, the field has interested me since my early days at university. I began exploring it a long time ago by reading articles about past trends in the AI industry, such as the ImageNet 2015 contest, and so on.

I also tried to build simple computer vision projects on my laptop with my limited knowledge of TensorFlow. I liked this kind of work and decided that at some point in the future I had to get real-world experience in data science.

Wow! That’s impressive! Can you please explain what a Data Engineer does compared to a Data Scientist?

Alright, that may vary from company to company and from project to project, but when people ask me, I always say that a Data Engineer primarily works in the engineering domain, handling tasks like developing and supporting databases, data warehouses, and data pipelines.

Data pipelines cover a lot of things you can do with your data: consuming, cleansing, formatting, transforming, and ingesting it, producing something that data scientists can then use (but that’s for later). Data Engineers also deploy AI models built by Data Scientists to production and maintain them there.
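To make these stages a bit more concrete, here is a minimal sketch of a pipeline built from plain Python functions, one per stage: consuming raw lines, cleansing bad rows, formatting types, transforming (aggregating) the data, and ingesting the result into a store. The record layout (`name,amount`), the function names, and the in-memory "warehouse" dict are hypothetical, chosen only for illustration; real pipelines usually run on an orchestrator and load into an actual database or warehouse.

```python
from __future__ import annotations

from typing import Iterable, Iterator


def consume(raw_lines: Iterable[str]) -> Iterator[list[str]]:
    """Consume raw CSV-like lines and split each one into fields."""
    for line in raw_lines:
        yield line.split(",")


def cleanse(rows: Iterable[list[str]]) -> Iterator[list[str]]:
    """Drop malformed rows: wrong number of fields or empty values."""
    for row in rows:
        if len(row) == 2 and all(field.strip() for field in row):
            yield row


def format_rows(rows: Iterable[list[str]]) -> Iterator[dict]:
    """Normalize names and convert amounts to numbers."""
    for name, amount in rows:
        yield {"name": name.strip().title(), "amount": float(amount)}


def transform(records: Iterable[dict]) -> dict[str, float]:
    """Aggregate the total amount per name."""
    totals: dict[str, float] = {}
    for record in records:
        totals[record["name"]] = totals.get(record["name"], 0.0) + record["amount"]
    return totals


def ingest(totals: dict[str, float], store: dict[str, float]) -> None:
    """Load the result into a store (here just a dict) for data scientists to use."""
    store.update(totals)


raw = ["alice, 10", "BOB,5.5", "alice,2", "broken line", "carol,"]
warehouse: dict[str, float] = {}
ingest(transform(format_rows(cleanse(consume(raw)))), warehouse)
print(warehouse)  # {'Alice': 12.0, 'Bob': 5.5}
```

Chaining generators keeps each stage small and testable on its own, and records stream through the first stages one at a time instead of being loaded into memory all at once.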

Data Scientists, in turn, find specific patterns in data, test hypotheses, build predictive models, and use data of any kind to solve business problems, answer various business questions, and so forth. They can use data prepared by Data Engineers or help them write data pipeline scripts. For instance, scripts written by Data Scientists to compute statistics might also run as part of the data pipeline.

I identify more as a Data Scientist, but all in all, these two roles are closely intertwined: they work together to make data clean, coherent, and comprehensible.

By the way, congratulations on passing the TensorFlow Developer Certificate exam on ML skills! Is it hard to get TensorFlow certification? And is the TensorFlow certificate free?

Cheers! TensorFlow is currently one of the most popular open-source AI libraries for dataflow and differentiable programming. It was developed by the Google Brain team and is written mainly in Python and C++, with APIs available for other languages such as Java.

It’s primarily used for machine learning applications and deep neural network research. It’s also well suited for large projects thanks to its mature ecosystem of products (TensorFlow Hub, TensorFlow Serving, TensorFlow Lite), great community, detailed documentation, and lots of code examples on the internet.

As for TensorFlow certification, it’s not free, but it’s quite a bargain: $100. I can’t say much about its difficulty, except that preparing for it definitely takes time. More details on requirements, terms and conditions, and prerequisites can be found on TensorFlow’s official website.

In your opinion, what is the best framework for AI development in 2023?

There are two AI market leaders now: TensorFlow, which is best for large projects, and PyTorch, which is ideal for smaller projects and research. Both tools have great communities, documentation, and lots of code examples.

As for scikit-learn, pandas, and NumPy, they are the core of ML projects: convenient both for research and for building production-ready systems, they will continue to be used everywhere and to evolve further.

But when choosing a tool, you should rely on your developers’ experience and your business needs.

By the way, can you explain simply what the main difference between AI, ML, and DL is?

Well, AI is a general term for systems that can perform tasks or make decisions without being directly programmed to do so. Standard software is a sequence of computation steps that follow rules defined by a programmer, whereas an AI system can learn those rules from training data by itself and solve the problem without explicit programming.

ML is a subset of AI built on machine learning algorithms that learn from data. DL, in turn, is a subset of ML that specifically uses neural networks (typically with many layers).
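A toy example can make the difference between rules written by a programmer and rules learned from data more tangible. Below, the first function hard-codes a threshold, while the second learns one from labeled examples by trying each observed value and keeping the threshold with the fewest training errors (essentially a one-feature decision stump, one of the simplest ML models). The data and function names are hypothetical, made up for illustration.

```python
from __future__ import annotations


def handwritten_is_hot(temperature_c: float) -> bool:
    """Traditional software: a programmer decides the rule (30 degrees C) explicitly."""
    return temperature_c > 30.0


def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """ML-style: learn the rule `x > t` from labeled examples.

    Every observed value is tried as a candidate threshold t, and the one that
    misclassifies the fewest training samples wins (a one-feature decision stump).
    """
    best_t, best_errors = None, None
    for t in sorted({x for x, _ in samples}):
        errors = sum((x > t) != label for x, label in samples)
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


# Hypothetical training data: (temperature in degrees C, did people call the day "hot"?)
training = [(10, False), (15, False), (22, False), (25, True), (31, True), (35, True)]
learned_t = learn_threshold(training)
print(learned_t)               # 22: derived from the data, not hard-coded
print(handwritten_is_hot(27))  # False under the programmer's rule
print(27 > learned_t)          # True under the learned rule
```

If the labels in the training data change, the learned rule changes with them, while the hand-written rule stays fixed until someone edits the code.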

Is Python the best language for machine learning? What other tools and technologies are common in this field?

Python is still the de facto standard language in AI. It has all the libraries required for AI implementation, covering big-data processing, ML, DL, etc. (like TensorFlow, scikit-learn, and so forth), and, what’s also important, it’s a piece of cake to learn. C++ is used internally in many ML/AI libraries: TensorFlow and PyTorch are largely written in C++, and NumPy’s core is written in C, which makes these libraries fast at computation.

Regarding Scala, it can be highly valuable when you need to work with big data on Spark (but that’s a separate topic for discussion). Julia is taking off now in 2023, as it has several advantages over Python: its execution is generally considered much faster than Python’s, and it has built-in support for mathematical expressions. In addition, it can call Python functions, which might make the transition from Python to Julia easier for future products, but let’s see how it evolves over the next few years.

In general, what is machine learning currently used for?

AI and ML have many applications that both businesses and people can benefit from. I’ll list a couple of them. AI is used heavily in the finance industry, offering services to both business owners and customers, for example, automatically identifying opportunities to buy or sell stocks.

It’s also widely used in the media and entertainment field; basic examples here are smart assistants like Siri, Alexa, and Google Assistant, preference-based recommendation systems, and social media with automatic friend tagging and recommendations.

ML plays a vital role in healthcare too: cancer detection, for example, is an application of image recognition. Machine learning algorithms help doctors diagnose diseases, predict patient deterioration, or even suggest treatment plans by analyzing medical images, genetic data, or electronic health records.

Both eCommerce and agriculture use price prediction tools that analyze multiple factors to suggest the best product prices, forecast revenue levels, and more.

What field of ML is the most interesting for you?

I really like working with images, so of course I’d say Computer Vision. By the way, the TensorFlow Object Detection API is a Python package that lets you easily and quickly create object detection systems based on pre-trained models.

In my practice, I used it in a pet project to detect credit cards from a mobile phone camera. In this project, I took a pre-trained Faster R-CNN model for object detection and further trained it on a custom dataset I built from photos of my old credit cards. In this small project, the object detection accuracy was approximately 80%.

You’ve been working at Fively since its first days as a company. Is AI a priority for Fively right now?

Fively’s main focus is still web development of services of any kind, and AI/ML is not the company’s primary goal. However, we’re moving rapidly in this direction: we launched an initiative to teach our peers some AI techniques, broaden AI experience among Fively’s employees, and spark more interest in this field within the company.

I myself was involved in building a learning path with Igor, our co-founder, so let’s see how it’ll impact our AI projects.

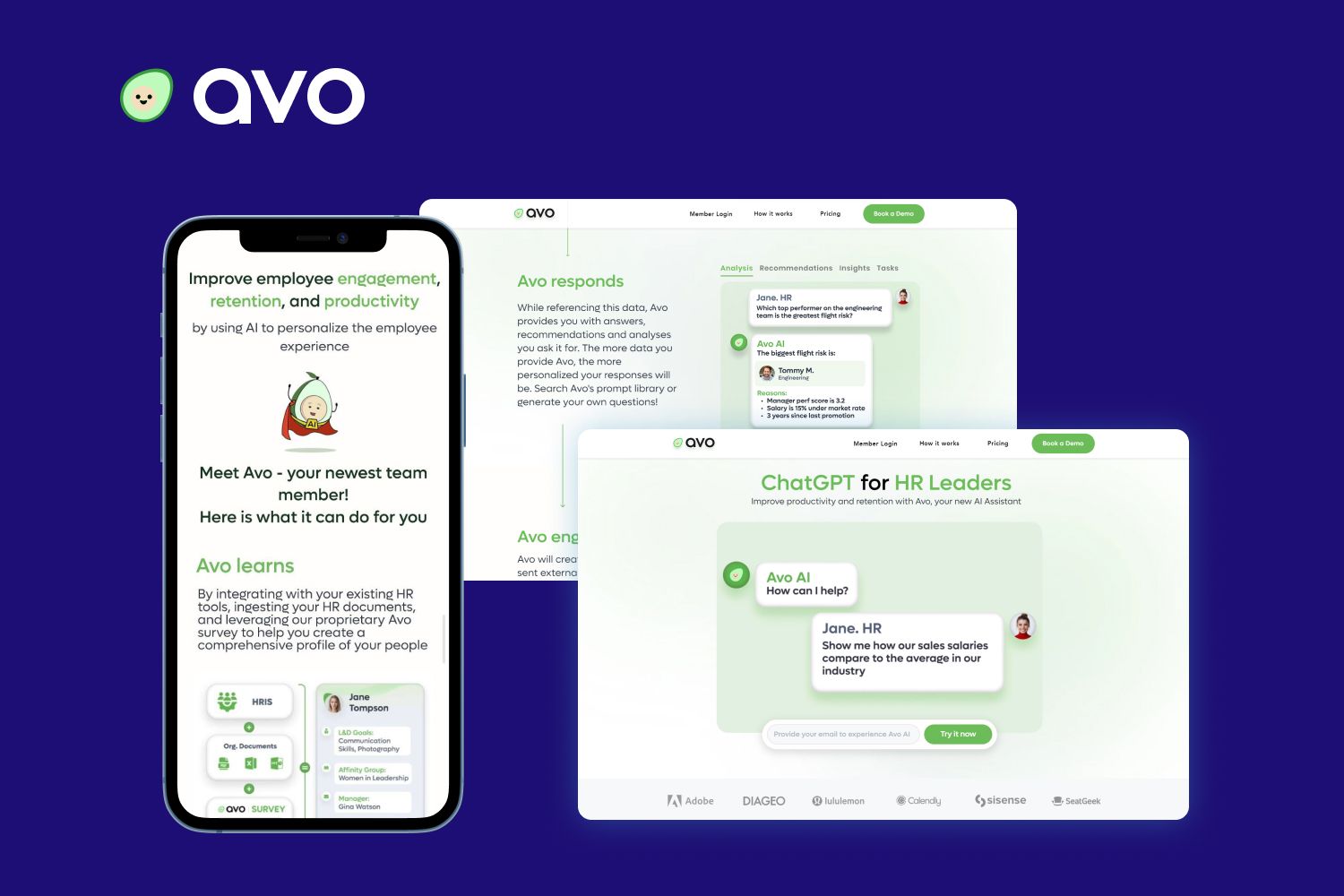

You may already know a couple of Fively’s AI projects, like the Avo HR management tool, which provides 1-click visualization of HRIS and questionnaire data through the Avo AI chatbot, a personal HR assistant.

We also developed a robust ML chatbot for a large AR platform headquartered in Europe, which automates up to 65% of conversations and increases response rates by up to 40%.

And I have to mention the AI-based marketing automation tool Fively created for predicting customers’ interests and revenue and for managing ad campaigns effectively.

But this is only the beginning, ‘cause we have a bunch of new projects where we build AI solutions for our customers. For example, in one of them, we implemented a complex ML-based classification of PDF documents. Another AI project is also impressive: I can’t say much due to the NDA, but its application field is auction pricing.

Wow, that’s interesting! And how has AI development evolved over time? I mean, all these modern platforms like ChatGPT or Midjourney, is it magic or what?

ChatGPT is a Generative AI system backed by the GPT (Generative Pre-trained Transformer) model. It generates text by appending words (tokens) one after another based on the provided context.

The Transformer is a top-notch type of neural network introduced in 2017 in the “Attention Is All You Need” paper. This brilliant idea turned out to be one of the most impactful findings in the AI world, not to mention that, beyond NLP, Transformers also handle a huge array of Computer Vision tasks very well.

Using ChatGPT, businesses in various industries can build their own GenAI products, just like we did in the Avo project, where Fively created a breakthrough HR automation tool that boosts employee engagement, performance, and satisfaction in businesses of all sizes.

Midjourney is a perfect example of Generative AI as well, but it solves a different kind of problem: it’s a text-to-image neural net that I believe everyone has heard of and tried at some point already. It’s gone viral in 2023, as the quality of the images it produces is higher than ever.

However, while we may categorize AI in many different ways, we need to understand that, at its core, any AI system is nothing but applied math and statistics, where you basically use existing data to make decisions. Even immense and sophisticated models like GPT (which powers ChatGPT) are basically statistical models that compute a probability distribution over word sequences, predicting the next words based on the sequences the model has been fed. So there’s actually no magic.
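To illustrate what computing a probability distribution of the next word means, here is a deliberately tiny bigram model in plain Python. It is not how GPT works internally (GPT is a Transformer that conditions on the whole preceding context rather than just the previous word, and it operates on tokens rather than whole words), but it follows the same statistical principle: estimate next-word probabilities from data, then generate text by repeatedly appending a likely next word. The corpus and function names are made up for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_distribution(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw counts into a probability distribution P(next word | current word)."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Generate text by repeatedly appending the most probable next word (greedy decoding)."""
    out = [start]
    for _ in range(length):
        dist = next_word_distribution(follows, out[-1])
        if not dist:  # no known continuation: stop early
            break
        out.append(max(dist, key=dist.get))
    return out


bigrams = train_bigrams("the cat sat on the mat and the cat slept")
print(next_word_distribution(bigrams, "the"))  # "cat" with probability 2/3, "mat" with 1/3
print(" ".join(generate(bigrams, "the", 3)))   # the cat sat on
```

Generation here is greedy: it always takes the single most probable word, breaking ties by first occurrence. Chat models typically sample from the distribution instead, which is one reason the same prompt can produce different answers.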

What is the most used form of Artificial Intelligence now?

I wouldn’t say there is a “most used form”, because each algorithm serves its own purposes. A simple example here would be gradient boosting, which works on structured data like tables and can perform classification or regression.
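Since gradient boosting comes up here as a typical tool for tabular data, here is a minimal sketch of the idea for regression with squared error, assuming a single numeric feature: start from the mean of the targets, then repeatedly fit a one-split decision tree (a “stump”) to the current residuals and add a scaled-down version of it to the model. It is a simplified illustration with made-up data and hypothetical function names; production libraries such as scikit-learn, XGBoost, or LightGBM use deeper trees, regularization, and many optimizations.

```python
from __future__ import annotations


def fit_stump(xs: list[float], residuals: list[float]) -> tuple[float, float, float]:
    """Find the single split `x <= s` that best fits the residuals (least squares).

    Returns (s, left_value, right_value); each value is the mean residual on
    that side of the split. Assumes at least two distinct x values.
    """
    best = None
    for s in sorted(set(xs))[:-1]:  # splitting at the max x would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= s]
        right = [r for x, r in zip(xs, residuals) if x > s]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, s, lv, rv)
    _, s, lv, rv = best
    return s, lv, rv


def gradient_boost(xs: list[float], ys: list[float], n_rounds: int = 50, learning_rate: float = 0.3):
    """Gradient boosting for squared error, using stumps as weak learners."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        # For the loss (y - p)^2 / 2, the negative gradient w.r.t. p is the residual y - p.
        residuals = [y - p for y, p in zip(ys, preds)]
        s, lv, rv = fit_stump(xs, residuals)
        stumps.append((s, lv, rv))
        preds = [p + learning_rate * (lv if x <= s else rv) for x, p in zip(xs, preds)]

    def predict(x: float) -> float:
        return base + sum(learning_rate * (lv if x <= s else rv) for s, lv, rv in stumps)

    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]
gb_model = gradient_boost(xs, ys)
print(round(gb_model(2), 2), round(gb_model(5), 2))
```

Each round moves the predictions only by `learning_rate` times the stump’s output; a smaller learning rate generally needs more rounds but tends to overfit less.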

On the other hand, Faster R-CNN (a type of deep convolutional network known as a Region-Based Convolutional Neural Network) performs well on object detection tasks, where the input images are unstructured data.

So both are needed, and that applies to any type of ML/DL algorithm. Let’s take a look at some basic types:

- Linear Regression - say we have an x/y plot where x is an independent variable and y is a dependent one, so the value of y depends on x. The LR algorithm fits a straight line through the (x, y) points on this plot, typically by minimizing the squared error. The line is then used to infer y-values for previously unseen x-values;

- Decision Trees - rarely used as standalone ML algorithms; they are primarily used as building blocks for other systems, like Random Forest or Gradient Boosting ensembles. This algorithm handles both classification and regression tasks: it recursively splits the dataset into subsets based on the most significant feature at each node, ultimately leading to a prediction of the target variable;

- KNN (k-nearest neighbors) - a supervised machine learning algorithm that classifies a new data point based on the majority class among its k nearest neighbors in the training dataset (or, for regression, averages their values), where "k" is a user-defined parameter;

- K-Means Clustering - an unsupervised clustering algorithm that separates data into k groups (clusters) so that the data points in each cluster are close to each other, more precisely, to their cluster’s centroid (mean point);

- Logistic Regression - essentially the same as Linear Regression, but used for classification. The formula is basically the same, but its output is passed to the logistic (sigmoid) function to compute the probability of a class, where the classes are usually labeled 0 and 1;

- Random Forest - an ensemble of decision trees that can be used for both regression and classification. The idea is to build a set of very deep trees independently (so they can be trained in parallel), each on a random bootstrap sample of the data and typically a random subset of features, with each tree predicting some value. Then, for regression, we take, for instance, the mean of the trees’ outputs, and for classification, the mode (the most frequent value among the results). This algorithm is more effective than a bare Decision Tree, ‘cause it generalizes much better to unseen data (less overfitting) thanks to the set of trees instead of just one;

- SVM - mostly used for classification; it works by finding a hyperplane (decision boundary) that maximizes the margin between two classes. In other words, it identifies the data points closest to the decision boundary (the support vectors), which are critical for determining the optimal separation between classes;

- Naive Bayes - commonly used for classification. It uses Bayes' theorem to classify data based on the probability of a given instance belonging to a particular class. It assumes that the features used for classification are independent of each other given the class, which simplifies the calculation of probabilities.
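For readers who want to see a few of these algorithms in action, here are minimal pure-Python sketches of Linear Regression (closed-form least squares), Logistic Regression (sigmoid plus gradient descent), KNN, and K-Means, run on tiny made-up datasets. The function names, the learning rate, the number of epochs and iterations, and the explicitly passed initial centroids are assumptions chosen for illustration (libraries pick initial centroids automatically; scikit-learn defaults to k-means++). In real projects you’d typically reach for scikit-learn’s `LinearRegression`, `LogisticRegression`, `KNeighborsClassifier`, and `KMeans` instead of hand-written loops.

```python
import math
from collections import Counter


def fit_line(xs, ys):
    """Linear Regression with one feature: closed-form least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def sigmoid(z):
    """Logistic function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, labels, lr=0.1, epochs=1000):
    """Logistic Regression with one feature: the linear formula w*x + b is passed
    through the sigmoid, and w, b are fitted by gradient descent on the log-loss."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, labels)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def knn_classify(train, point, k=3):
    """KNN: majority label among the k training points closest to `point`.
    `train` is a list of (coordinates, label) pairs."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def k_means(points, centroids, iterations=10):
    """K-Means: repeatedly assign each point to its nearest centroid, then move
    each centroid to the mean of the points assigned to it."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [
            tuple(sum(axis) / len(c) for axis in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids


slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(slope, intercept)  # 2.0 1.0

w, b = fit_logistic([-3, -2, -1, 1, 2, 3], [0, 0, 0, 1, 1, 1])
print(sigmoid(w * 2 + b) > 0.5)  # True: x = 2 is classified as class 1

train = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"), ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
print(knn_classify(train, (0.5, 0.5)))  # a

print(k_means([(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)], [(0, 0), (10, 10)]))
```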

AI is widely used for building chatbots and online twins. Do people (and you in particular) like chatbots?

Personally, I don’t find chatbots very helpful, but I can’t speak for other people. That said, I sometimes use ChatGPT in my day-to-day work, since it’s very good at providing ideas on how to solve different kinds of problems.

But if I found some kind of smart speaker with a built-in LLM and voice search, like the one in ChatGPT, I would certainly buy it.

There are lots of concerns about AI: people are afraid that it will spread their personal data and even end human existence. How would you comment on such predictions?

This is not an AI/ML issue: you don’t necessarily need an ML algorithm to steal personal data. In general, I’m strongly against any kind of personal data theft; processing personal data is fine only if people themselves consent to it.

About the possible end of humanity, again, this is not a question for AI/ML development, because everything depends on humanity’s goals: even without these technologies, the world can be destroyed in many ways. As I’ve already said, AI is just another application of mathematics and statistics, and the way we use it depends on us: you can use it to do harm or to do good.

By the way, do you use ChatGPT, Midjourney, and other AI-based tools at work? If yes, how would you assess their capabilities as a Data Scientist?

Yes, as I said before, I do use ChatGPT at work. It’s a powerful tool that can really help you find an approach to a problem you’ve never stumbled upon before. It also performs really well at providing code snippets for minor tasks.

As a Data Scientist, I used it to understand some neural network architectures. It’s nice to get a simple explanation of a concept without digging too deep when you’re just starting out, but then it’s really valuable to sit down and scrutinize what’s going on at a deeper level by reading scientific papers, as that gives a much broader understanding of any concept.

It’s worth mentioning that every bit of information ChatGPT returns has to be verified because, as I said, it’s a probabilistic machine, so we need to keep in mind that its replies might be totally incorrect.

What AI and ML trends, like the rise of Decision Intelligence or AI twins, will influence custom software development in 2024? How will AI impact the Metaverse?

In general, I can’t say much here, as I’m more about building ML algorithms than discussing the philosophy of AI and the upcoming changes in software engineering. But as for the Metaverse, I imagine it as an AR/VR space where a person will be able to control their avatar somehow. So I believe that AI, and specifically computer vision techniques, could help create an accurate avatar that looks and behaves just the way you want it to, and I believe that might be crucial for some people.

For example, I know that both Meta and Apple have already launched mixed reality headsets that they claim are suitable for entertainment, learning, and gaming, and I think that in the future we will see the rise of fully-fledged digital twins.

How will AI shape the future of work? Is it possible that people won’t work at all?

In my view, no. People will always work, because I truly believe that only people will ever have the capacity for critical thinking and imagination, which is the key to new knowledge.

On the other hand, AI will take over lots of human activities and will help us to further develop science and technology, but the key role will always be played by human beings. That’s the only symbiosis that I can think of, putting aside all sci-fi ideas 🙂

As for software engineering, AI will be capable of solving all kinds of simple tasks or projects, but when it comes to developing a custom project, human participation is absolutely essential.

What inspires you in your work as an AI and ML specialist? How do you keep your passion alive and manage your workload in such a rapidly changing field?

Everything is very simple: you need to find an area that you like, where you are truly interested in continuous improvement. As soon as you find it, you will suddenly have time to study it.

Conversely, if you feel too lazy to do something, or you have free time but still don’t do it, then it’s not quite the right thing for you, and you need to keep searching.

Regarding ML, you can research and invent new algorithms, or simply study and immerse yourself in the world of algorithms: this will definitely develop your brain, because there’s a lot of mathematics involved. As for me, I’m keen on Computer Vision and love spending time studying it.

Speaking about my working routine, I don’t have any special rituals; I just try to follow a comfortable regime: I usually get up at 9-10 am and work until 8 pm. After that, I take a short break for household chores, and then I spend about 2 hours on ML self-development. Here I mostly mean watching specialized YouTube and Telegram channels, like:

Outside of work, what hobbies or activities do you enjoy? How do you balance your work and personal life?

I don’t think I suffer from any kind of burnout, as I really like what I do and don’t need to force myself to study it. When a deadline approaches and I don’t have enough time, my only secret for dealing with it is simply to eat healthy and well, because the right food can give us a lot of energy and improve our well-being. I think one should make health a priority and never neglect basic needs like drinking enough water, sleeping, having meals at the right time, taking breaks, etc.

But the main problem I have is a lack of time. I would say there are 3 main layers in my life that I care about: work, communication with kith and kin, and music (playing the guitar in particular; I truly love it). I try to find time for each of them, but sometimes it can be difficult.

Also, I dream of traveling, so I’d like to start traveling a lot in the near future. Plus, I love snowboarding, but I rarely do it, so probably traveling will help me to do it more often.

Looking ahead, what are your further professional goals in AI and ML?

It’s quite difficult to talk about my future plans, as I’m not planning to get new certificates or take new courses right now. Instead, I want to concentrate on reading technical articles about Data Science, because reading them is sometimes really quite hard.

At the end of our interview, could you give beginners some advice on how to learn AI and Machine Learning?

I would choose a project that interests you and try to implement it by any means available. That alone will help you gain a lot of experience and knowledge. Once you’ve gotten your hands dirty, it’s time to dig deeper into the technical side: my advice would be to learn the basic ML algorithms outlined above, along with calculus and probability theory, which lie at the foundation of all ML and DL algorithms.

In a nutshell: try to implement something and then try to understand it deeper. Math is the best friend here, ‘cause it is the framework of all data science.

***

Thank you for reading this fascinating interview to the very end! Although it’s over, Fively’s AI & ML journey has only just begun! Feel free to share your thoughts on this interview on our social networks, and remember that our AI specialists are always ready to help you build the application of your dreams! Let’s fly!
